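Chapter 4 Cluster Monitoring

Introduction to k8s cluster monitoring

So far we have looked at how to monitor applications running in k8s. The state of the k8s cluster itself is just as important to monitor, for example:

k8s node status: memory, CPU, disk, network, and so on.

k8s component status: kube-apiserver, kube-controller-manager, kube-scheduler, coredns, and so on.

k8s resource status: Deployment, DaemonSet, Service, Ingress, and so on.

The main options for monitoring a k8s cluster are:

cAdvisor: monitors resource usage inside containers; it is already built into the kubelet.

kube-state-metrics: monitors the state of k8s resource objects, such as Deployments and Pod replica status.

metrics-server: provides CPU and memory usage for nodes and Pods (the data behind kubectl top).

k8s node monitoring

In a traditional architecture we would use the mature Zabbix to monitor node status. In k8s we can use node-exporter from the Prometheus project directly to collect node metrics such as CPU, memory, disk, and network.

Project URL: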

https://github.com/prometheus/node_exporter

Because this is node-level monitoring, we deploy node-exporter as a DaemonSet so that one instance runs on every node.

cat > prom-node-exporter.yaml << 'EOF'
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: prom
  labels:
    app: node-exporter
spec:
  selector:
    matchLabels:
      app: node-exporter
  template:
    metadata:
      labels:
        app: node-exporter
    spec:
      hostPID: true       # use the host PID namespace
      hostIPC: true       # use the host IPC namespace
      hostNetwork: true   # use the host network namespace
      nodeSelector:
        kubernetes.io/os: linux
      containers:
      - name: node-exporter
        image: prom/node-exporter:latest
        args:
        - --web.listen-address=$(HOSTIP):9100   # address and port that expose the metrics
        - --path.procfs=/host/proc              # where the host /proc is mounted
        - --path.sysfs=/host/sys                # where the host /sys is mounted
        - --path.rootfs=/host/root              # where the host root filesystem is mounted
        - --no-collector.hwmon                  # disable collectors we do not need
        - --no-collector.nfs
        - --no-collector.nfsd
        - --no-collector.nvme
        - --no-collector.dmi
        - --no-collector.arp
        # Newer node_exporter releases name the next two flags
        # --collector.filesystem.mount-points-exclude and --collector.filesystem.fs-types-exclude.
        - --collector.filesystem.ignored-mount-points=^/(dev|proc|sys|var/lib/docker/.+)($|/)   # mount points to ignore
        - --collector.filesystem.ignored-fs-types=^(autofs|binfmt_misc|cgroup|configfs|debugfs|devpts|devtmpfs|fusectl|hugetlbfs|mqueue|overlay|proc|procfs|pstore|rpc_pipefs|securityfs|sysfs|tracefs)$   # filesystem types to ignore
        ports:
        - containerPort: 9100
        env:
        - name: HOSTIP
          valueFrom:
            fieldRef:
              fieldPath: status.hostIP   # read the IP address of the node itself
        resources:
          requests:
            cpu: 150m
            memory: 180Mi
          limits:
            cpu: 150m
            memory: 180Mi
        securityContext:       # security context: defines the container's privileges and access control
          runAsNonRoot: true   # do not run the container as root
          runAsUser: 65534     # run as this UID
        volumeMounts:
        - name: proc
          mountPath: /host/proc
        - name: sys
          mountPath: /host/sys
        - name: root
          mountPath: /host/root
          mountPropagation: HostToContainer   # controls how mounts propagate from the host into the container
          readOnly: true
      tolerations:           # tolerations
      - operator: "Exists"   # Exists with no key matches every taint, so the pod can run on tainted nodes too
      volumes:
      - name: proc
        hostPath:
          path: /proc
      - name: dev
        hostPath:
          path: /dev
      - name: sys
        hostPath:
          path: /sys
      - name: root
        hostPath:
          path: /
EOF

Notes on the manifest:

# Exposing pod information to containers
https://kubernetes.io/docs/tasks/inject-data-application/environment-variable-expose-pod-information/

env:
- name: HOSTIP
  valueFrom:
    fieldRef:
      fieldPath: status.hostIP   # read the IP address of the node itself

--------------------------------

securityContext:       # security context: defines the container's privileges and access control
  runAsNonRoot: true   # do not run the container as root
  runAsUser: 65534     # run as this UID

--------------------------------

tolerations:           # tolerations
- operator: "Exists"   # Exists with no key matches every taint, so the pod can run on tainted nodes too

--------------------------------

hostPID: true       # use the host PID namespace
hostIPC: true       # use the host IPC namespace
hostNetwork: true   # use the host network namespace

Apply the manifest:

[root@node1 prom]# kubectl apply -f prom-node-exporter.yaml
daemonset.apps/node-exporter created

Check that the pods were created:

[root@node1 prom]# kubectl -n prom get pod
NAME                          READY   STATUS    RESTARTS   AGE
mysql-dp-79b48cff96-m96bz     2/2     Running   0          15m
node-exporter-7zhjf           1/1     Running   0          41s
node-exporter-kzqvz           1/1     Running   0          41s
node-exporter-zbsrl           1/1     Running   0          41s
prometheus-796566c67c-lhrns   1/1     Running   0          60m
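To confirm that an exporter is actually serving data, one option is to fetch its metrics endpoint and pick out a single metric. The sketch below assumes a hypothetical helper named metric_value and feeds it made-up sample text so that it runs without a cluster; node_load1 is one of the gauges node-exporter exposes.

```shell
#!/bin/sh
# metric_value is a hypothetical helper: it reads Prometheus exposition text
# on stdin and prints the value of the unlabelled metric named in $1.
metric_value() {
  awk -v name="$1" '$1 == name { print $2 }'
}

# Made-up sample of what /metrics returns (not real output from this cluster).
printf '%s\n' \
  '# HELP node_load1 1m load average.' \
  '# TYPE node_load1 gauge' \
  'node_load1 0.42' \
  | metric_value node_load1
```

Against a real node this would look like curl -s http://<node-ip>:9100/metrics | metric_value node_load1, where <node-ip> is a placeholder for the address of one of your nodes.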

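k8s service discovery

We now have node-exporter running on every node, but how do we scrape the data from each of them? Hard-coding every IP as we did earlier is too much work, and pointing at a Service would show up as only a single target. Neither approach is convenient. This is where Prometheus service discovery comes in.

In k8s, Prometheus integrates with the k8s API and supports five discovery roles: Node, Service, Pod, Endpoints, and Ingress.

Official documentation: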

https://prometheus.io/docs/prometheus/latest/configuration/configuration/#kubernetes_sd_config

Node auto-discovery

Update the Prometheus configuration:

cat > prom-cm.yml << 'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: prom
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      scrape_timeout: 15s
    scrape_configs:
    - job_name: 'prometheus'
      static_configs:
      - targets: ['localhost:9090']
    - job_name: 'coredns'
      static_configs:
      - targets: ['10.2.0.16:9153','10.2.0.17:9153']
    - job_name: 'mysql'
      static_configs:
      - targets: ['mysql-svc:9104']
    - job_name: 'nodes'
      kubernetes_sd_configs:   # Kubernetes service discovery
      - role: node             # discovery role: node
EOF

Apply the configuration:

[root@node1 prom]# kubectl apply -f prom-cm.yml
configmap/prometheus-config configured

[root@node1 prom]# curl -X POST "http://10.2.2.86:9090/-/reload"

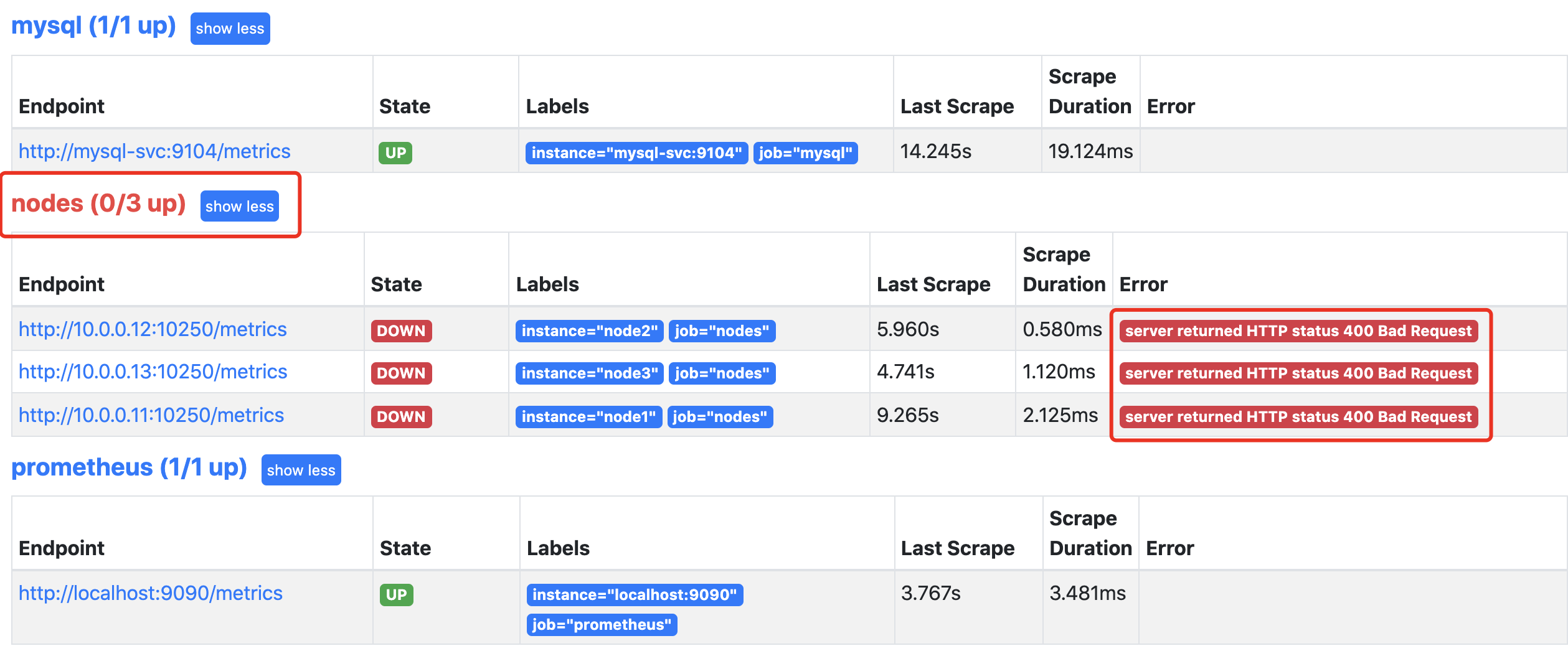

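View in Prometheus:

At this point the targets page reports errors, because with the node role Prometheus scrapes the kubelet port 10250 by default. We need to replace 10250 with 9100, the node-exporter port.

This is what the replace action of relabel_configs is for. Before a scrape, replace can rewrite label values dynamically based on the target's metadata. Here we use it to change the port in the __address__ label to 9100.

Official description of relabel_config: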

https://prometheus.io/docs/prometheus/latest/configuration/configuration/#relabel_config
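To see what such a replace rule does to a discovered address, the rewrite can be approximated offline with sed. This is only a sketch: Prometheus uses RE2 and anchors the regex at both ends, which is mimicked here with ^ and $. The relabel_address helper and the sample addresses are made up for illustration.

```shell
#!/bin/sh
# Approximate the Prometheus "replace" relabel action with sed.
# relabel_address is a hypothetical helper, not part of Prometheus.
relabel_address() {
  # The regex (.*):10250 is fully anchored, as Prometheus does implicitly.
  printf '%s\n' "$1" | sed -E 's/^(.*):10250$/\1:9100/'
}

relabel_address '10.0.0.11:10250'   # kubelet port is rewritten to 9100
relabel_address '10.0.0.11:9153'    # regex does not match, value is unchanged
```

The second call shows the behaviour when the regex does not match: the label keeps its original value.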

Update the Prometheus configuration:

cat > prom-cm.yml << 'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: prom
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      scrape_timeout: 15s
    scrape_configs:
    - job_name: 'prometheus'
      static_configs:
      - targets: ['localhost:9090']
    - job_name: 'coredns'
      static_configs:
      - targets: ['10.2.0.16:9153','10.2.0.17:9153']
    - job_name: 'mysql'
      static_configs:
      - targets: ['mysql-svc:9104']
    - job_name: 'nodes'
      kubernetes_sd_configs:
      - role: node
      relabel_configs:                   # relabeling rules
      - action: replace                  # action to perform when the regex matches
        source_labels: ['__address__']   # labels whose values are used as input
        regex: '(.*):10250'              # regex matched against the extracted value
        replacement: '${1}:9100'         # replacement value, may reference capture groups
        target_label: __address__        # label that receives the result
EOF

Apply the configuration:

[root@node1 prom]# kubectl apply -f prom-cm.yml
configmap/prometheus-config configured

Wait a moment for the updated ConfigMap to be synced into the pod, then reload:

[root@node1 prom]# curl -X POST "http://10.2.2.86:9090/-/reload"

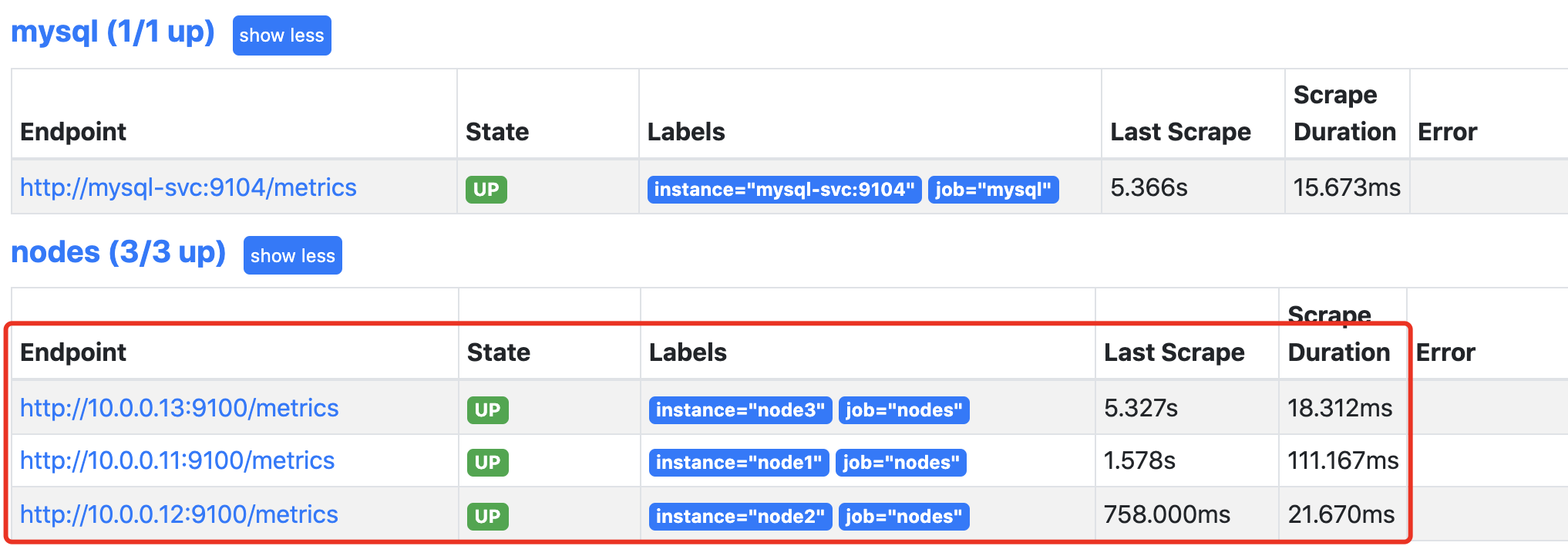

View in Prometheus:

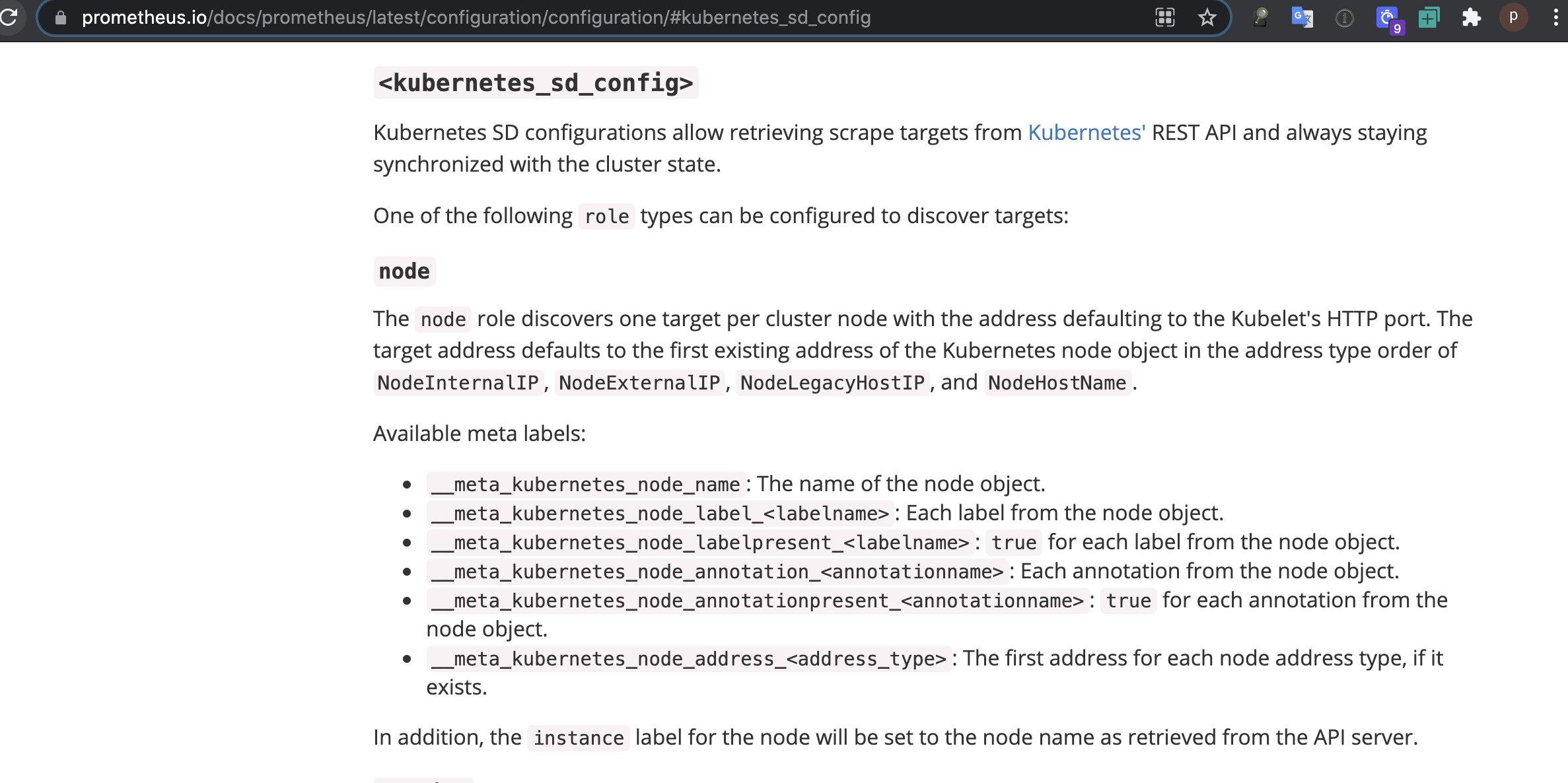

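One problem remains: the targets currently carry only the hostname and job labels, which is not enough for grouping queries later, so we need to attach more labels. For the node role, Prometheus service discovery provides the meta labels below; see the official documentation for details: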

https://prometheus.io/docs/prometheus/latest/configuration/configuration/#kubernetes_sd_config

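Label descriptions:

__meta_kubernetes_node_name: the name of the node object.

__meta_kubernetes_node_label_<labelname>: each label of the node object.

__meta_kubernetes_node_annotation_<annotationname>: each annotation of the node object.

__meta_kubernetes_node_address_<address_type>: the first address for each node address type, if it exists.

Relabel actions:

<relabel_action> determines the relabeling action to take:

replace: match regex against the concatenated source_labels. Then set target_label to replacement, with match group references (${1}, ${2}, ...) in replacement substituted by their values. If regex does not match, no replacement takes place.

lowercase: map the concatenated source_labels to lower case.

uppercase: map the concatenated source_labels to upper case.

keep: drop targets for which regex does not match the concatenated source_labels.

drop: drop targets for which regex matches the concatenated source_labels.

keepequal: drop targets for which the concatenated source_labels do not match target_label.

dropequal: drop targets for which the concatenated source_labels match target_label.

hashmod: set target_label to the modulus of a hash of the concatenated source_labels.

labelmap: match regex against all label names, not just those listed in source_labels. Then copy the values of the matching labels to the label names given by replacement, with match group references (${1}, ${2}, ...) in replacement substituted by their values.

labeldrop: match regex against all label names. Any label that matches is removed from the label set.

labelkeep: match regex against all label names. Any label that does not match is removed from the label set.

When using labeldrop and labelkeep, take care that metrics are still uniquely labeled once the labels are removed.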

Update the configuration:

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: prom
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      scrape_timeout: 15s
    scrape_configs:
    - job_name: 'prometheus'
      static_configs:
      - targets: ['localhost:9090']
    - job_name: 'coredns'
      static_configs:
      - targets: ['10.2.0.16:9153','10.2.0.17:9153']
    - job_name: 'mysql'
      static_configs:
      - targets: ['mysql-svc:9104']
    - job_name: 'nodes'
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - action: replace
        source_labels: ['__address__']
        regex: '(.*):10250'
        replacement: '${1}:9100'
        target_label: __address__
      - action: labelmap                           # match the regex against all label names
        regex: __meta_kubernetes_node_label_(.+)   # copy each matching label to a label named by the capture group

Notes on the configuration:

Official description:

https://prometheus.io/docs/prometheus/latest/configuration/configuration/#relabel_config

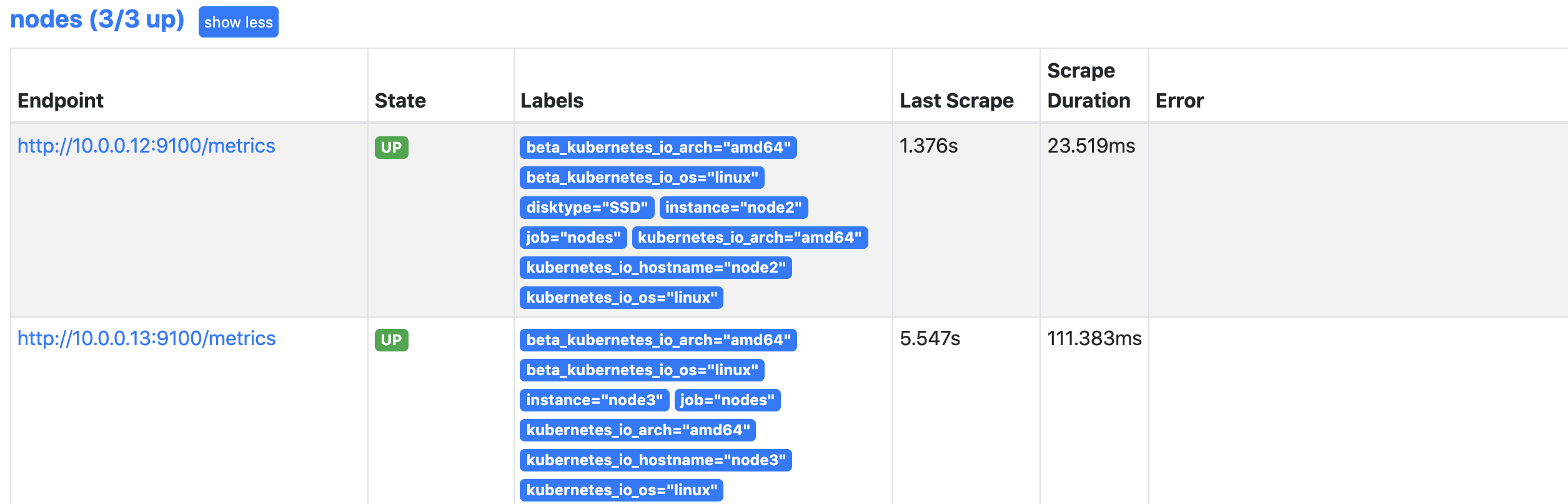

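This adds a labelmap action: every label whose name matches the regular expression __meta_kubernetes_node_label_(.+) is copied to a new label named after the captured group, so the node's own labels become labels on the target.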
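The effect of labelmap can be approximated offline as well. The sketch below takes labels as name=value lines and prints the labels that the rule would add; labelmap_node_labels is a hypothetical helper and the sample labels are made up. Note that Prometheus sanitizes label names, so a node label such as kubernetes.io/os shows up as kubernetes_io_os.

```shell
#!/bin/sh
# Approximate the "labelmap" action for regex __meta_kubernetes_node_label_(.+).
# labelmap_node_labels is a hypothetical helper: it reads name=value lines on
# stdin and prints only the new labels the rule would create.
labelmap_node_labels() {
  sed -n -E 's/^__meta_kubernetes_node_label_([^=]+)=(.*)$/\1=\2/p'
}

# Made-up sample of the meta labels attached to a discovered node.
printf '%s\n' \
  '__meta_kubernetes_node_name=node1' \
  '__meta_kubernetes_node_label_kubernetes_io_os=linux' \
  '__meta_kubernetes_node_label_kubernetes_io_hostname=node1' \
  | labelmap_node_labels
```

Only the two node_label entries produce output; __meta_kubernetes_node_name does not match the regex and is ignored by this rule.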

Apply the configuration:

[root@node1 prom]# kubectl apply -f prom-cm.yml
configmap/prometheus-config configured

Wait a moment, then reload:

[root@node1 prom]# curl -X POST "http://10.2.2.86:9090/-/reload"

View in Prometheus:

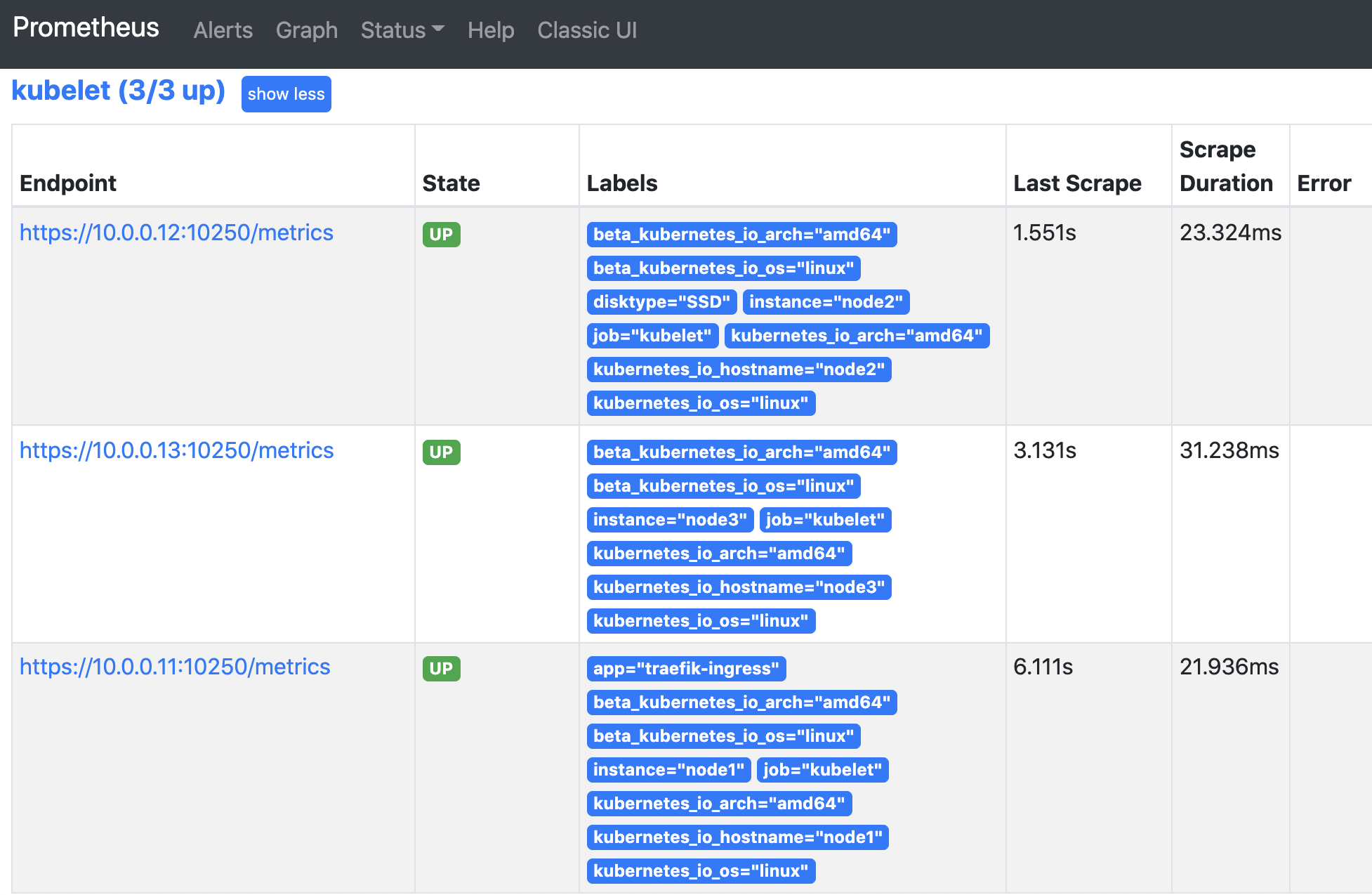

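kubelet auto-discovery

For the kubelet, Prometheus discovers port 10250. That port only speaks HTTPS and requires credentials, so we use the cluster CA certificate that k8s provides. Accessing cluster resources also requires permission, so requests must carry the ServiceAccount token we created for Prometheus earlier. The secret generated by the RBAC resources we created for Prometheus is mounted into the pod as files, so attaching that token to each request is enough to be authorized. We also skip certificate verification.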
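The same request can be reproduced by hand, which helps when a kubelet target shows as down. The sketch below is an assumption about how one might test it, not part of the original setup: kubelet_metrics_url and fetch_kubelet_metrics are hypothetical helpers, 10.0.0.11 is only a sample address, and fetch_kubelet_metrics must run in a pod that uses the Prometheus ServiceAccount and has curl installed.

```shell
#!/bin/sh
# Directory where Kubernetes mounts the ServiceAccount credentials in a pod.
SA_DIR=/var/run/secrets/kubernetes.io/serviceaccount

# kubelet_metrics_url (hypothetical helper) builds the scrape URL for a node
# address ($1) and an optional metrics path ($2, default /metrics).
kubelet_metrics_url() {
  printf 'https://%s:10250%s\n' "$1" "${2:-/metrics}"
}

# fetch_kubelet_metrics (hypothetical helper) mirrors the 'kubelet' job:
# HTTPS, bearer token from the mounted secret, and -k to skip certificate
# verification like insecure_skip_verify: true. It is defined but not called
# here, because it only works inside a suitably authorized pod.
fetch_kubelet_metrics() {
  curl -sk -H "Authorization: Bearer $(cat "$SA_DIR/token")" \
    "$(kubelet_metrics_url "$1" "$2")"
}

# Print the URL that would be requested for a sample node address.
kubelet_metrics_url 10.0.0.11
```

Inside such a pod, fetch_kubelet_metrics 10.0.0.11 | head would show the first few metric lines if the token is accepted.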

The configuration is as follows:

cat > prom-cm.yml << 'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: prom
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      scrape_timeout: 15s
    scrape_configs:
    - job_name: 'prometheus'
      static_configs:
      - targets: ['localhost:9090']
    - job_name: 'coredns'
      static_configs:
      - targets: ['10.2.0.16:9153','10.2.0.17:9153']
    - job_name: 'mysql'
      static_configs:
      - targets: ['mysql-svc:9104']
    - job_name: 'nodes'
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - source_labels: ['__address__']
        regex: '(.*):10250'
        replacement: '${1}:9100'
        target_label: __address__
        action: replace
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
    - job_name: 'kubelet'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        insecure_skip_verify: true
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
EOF

Notes on the configuration:

- job_name: 'kubelet'
  kubernetes_sd_configs:
  - role: node
  scheme: https   # protocol used for the scrape requests
  tls_config:     # TLS settings
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt   # CA certificate
    insecure_skip_verify: true                                      # skip certificate verification
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token   # ServiceAccount token; the secret is mounted into the pod as a file by default
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)

Apply the configuration:

[root@node1 prom]# kubectl apply -f prom-cm.yml
configmap/prometheus-config configured

Wait a moment, then reload:

[root@node1 prom]# curl -X POST "http://10.2.2.86:9090/-/reload"

Check the result:

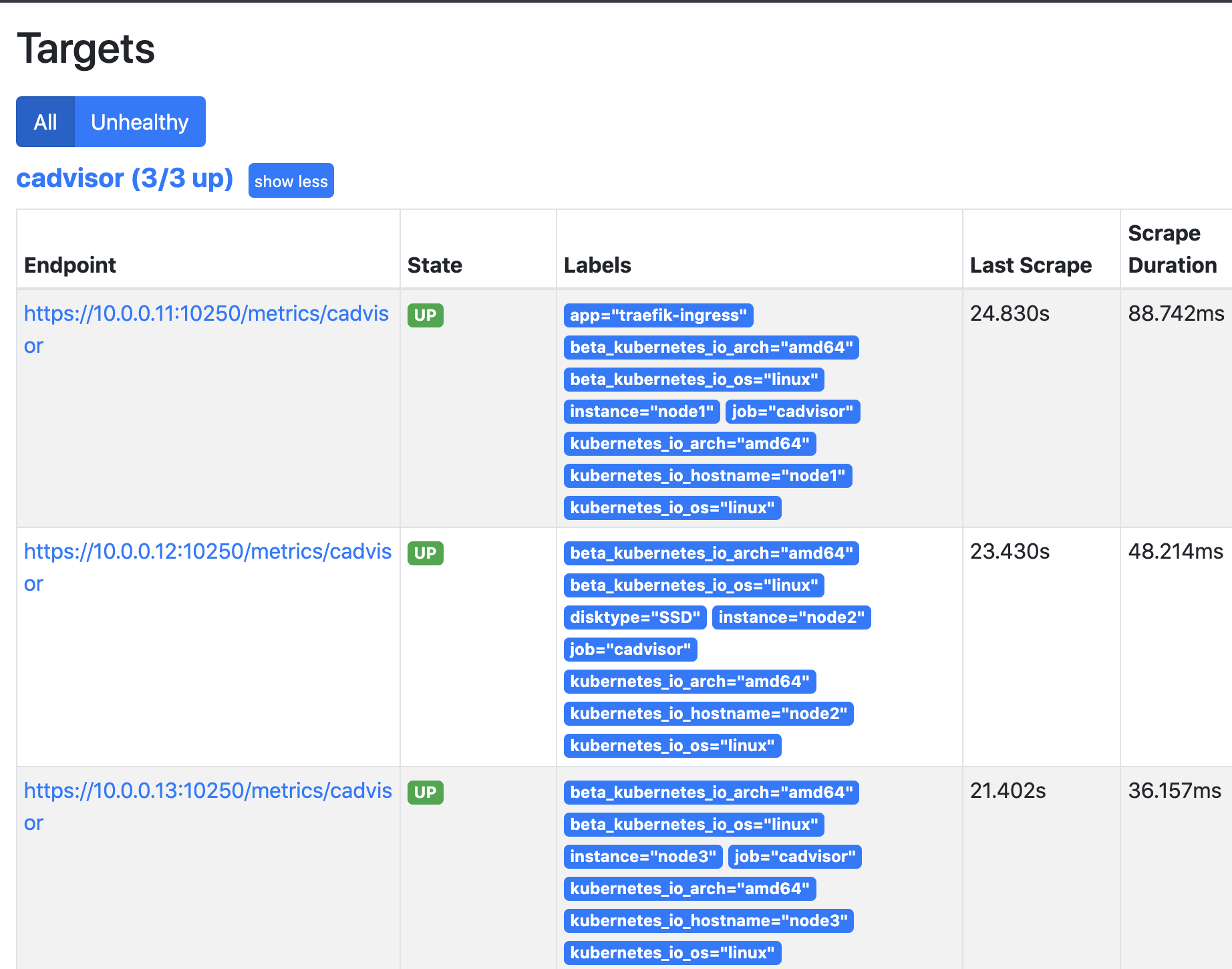

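Container auto-discovery

When we covered Docker we learned that container metrics are collected with cAdvisor, and the k8s kubelet already has cAdvisor built in. So all we need to do is query it: the cAdvisor data is served from the same address the kubelet exposes, one path level deeper, at /metrics/cadvisor.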

Configuration file:

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: prom
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      scrape_timeout: 15s
    scrape_configs:
    - job_name: 'prometheus'
      static_configs:
      - targets: ['localhost:9090']
    - job_name: 'coredns'
      static_configs:
      - targets: ['10.2.0.16:9153','10.2.0.17:9153']
    - job_name: 'mysql'
      static_configs:
      - targets: ['mysql-svc:9104']
    - job_name: 'nodes'
      kubernetes_sd_configs:   # Kubernetes service discovery
      - role: node             # discovery role: node
      relabel_configs:
      - action: replace
        source_labels: ['__address__']   # source label to rewrite
        regex: '(.*):10250'              # matches e.g. (10.0.0.10):10250
        replacement: '${1}:9100'         # result, e.g. 10.0.0.10:9100
        target_label: __address__        # overwrite the original label with the result
      - action: labelmap                           # match the regex against all label names
        regex: __meta_kubernetes_node_label_(.+)   # copy each matching label to a label named by the capture group
    - job_name: 'kubelet'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        insecure_skip_verify: true
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
    - job_name: 'kubernetes-cadvisor'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        insecure_skip_verify: true
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
        replacement: $1
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        replacement: /metrics/cadvisor
        target_label: __metrics_path__

Apply the configuration:

[root@node1 prom]# kubectl apply -f prom-cm.yml
configmap/prometheus-config configured

[root@node1 prom]# curl -X POST "http://10.2.2.86:9090/-/reload"

Check the result:

The metrics that can be queried are described in the cAdvisor documentation:

https://github.com/google/cadvisor/blob/master/docs/storage/prometheus.md

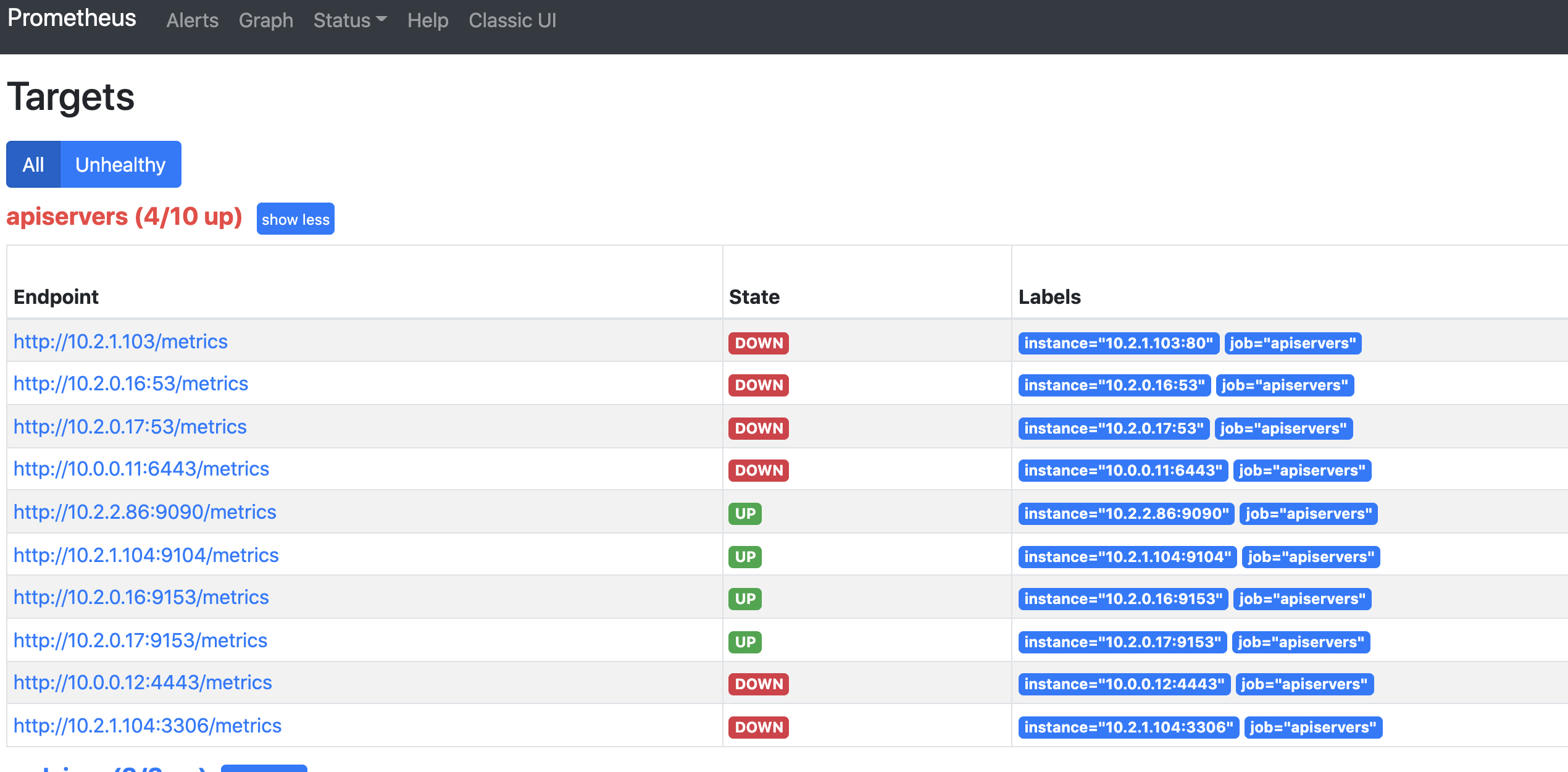

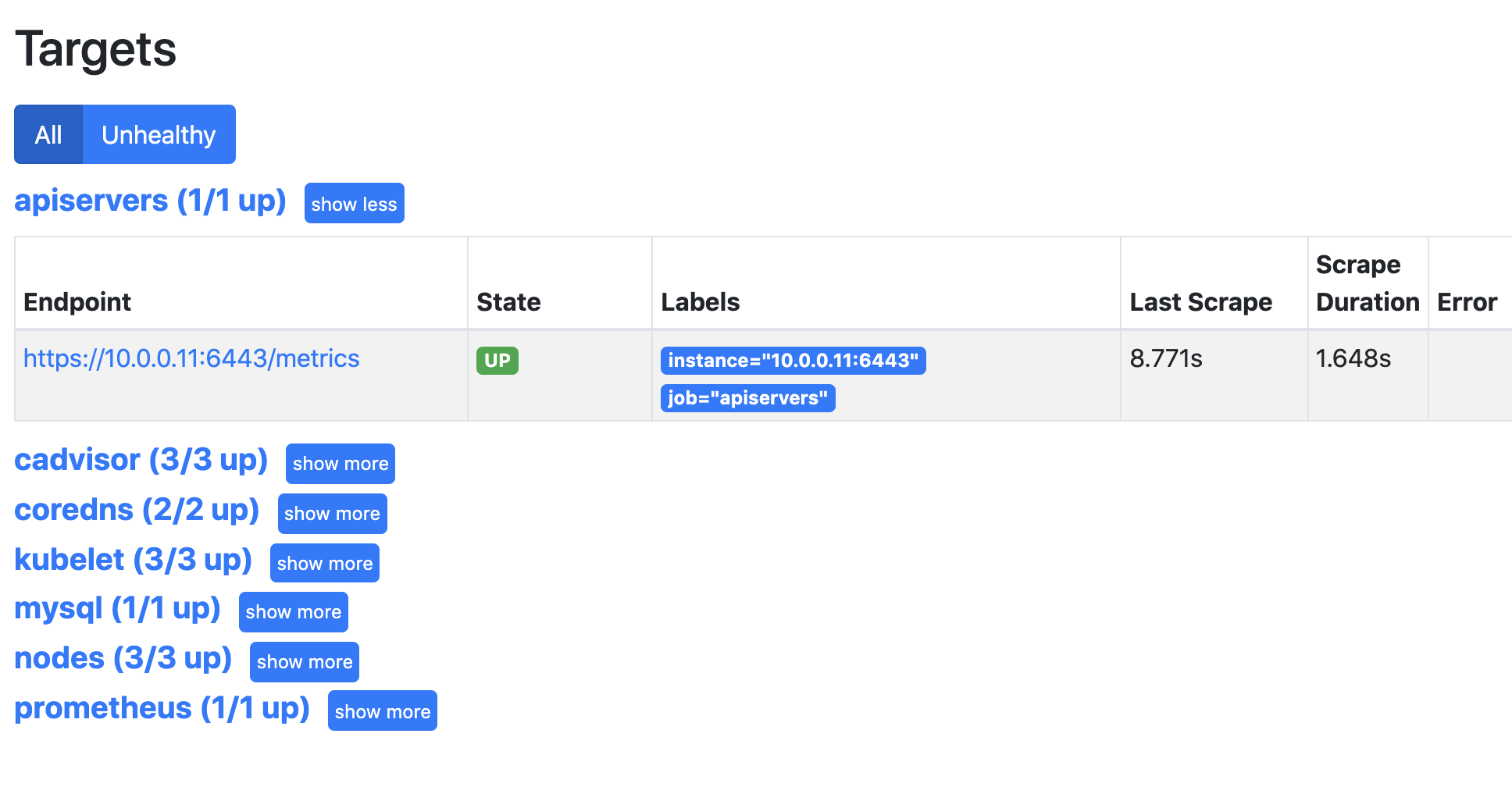

API Server auto-discovery

The API Server is the core component of k8s. To monitor it we can go through its k8s Service:

[root@node1 prom]# kubectl get svc
NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.1.0.1     <none>        443/TCP   10d

Update the configuration:

- job_name: 'apiservers'
  kubernetes_sd_configs:
  - role: endpoints

Apply the configuration:

kubectl apply -f prom-cm.yml

curl -X POST "http://10.2.2.86:9090/-/reload"

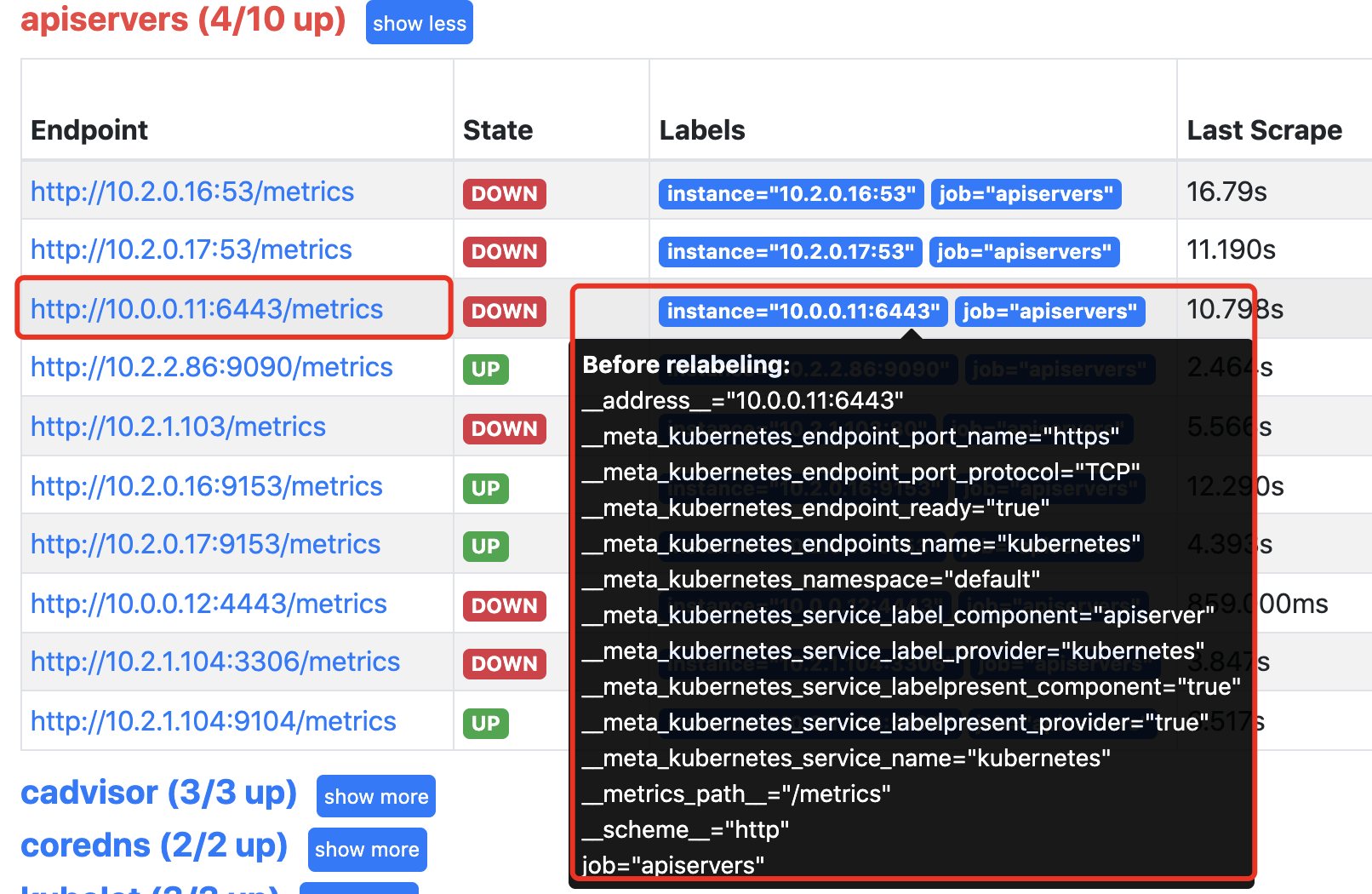

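Check the result:

Prometheus has now found every endpoint in the cluster. Which ones do we actually need? Looking at the API Server's Service shows that the API Server listens on port 6443, so the endpoint on port 6443 is the one we want.

[root@node1 prom]# kubectl describe svc kubernetes
Name:              kubernetes
Namespace:         default
Labels:            component=apiserver
                   provider=kubernetes
Annotations:       <none>
Selector:          <none>
Type:              ClusterIP
IP:                10.1.0.1
Port:              https  443/TCP
TargetPort:        6443/TCP
Endpoints:         10.0.0.11:6443
Session Affinity:  None
Events:            <none>

To keep only the API Server among the discovered targets, we look at which labels it carries and keep the targets that have them.

The condition to match is that the label __meta_kubernetes_service_label_component has the value "apiserver".

Because this port speaks HTTPS, we also need to supply the CA certificate and the token.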
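The keep action used for this filter can be pictured as a full-match test on the label value. The following sketch approximates it with grep; keep_target is a hypothetical helper and the component values are sample inputs, with the empty string standing for a Service that has no component label.

```shell
#!/bin/sh
# Approximate the "keep" action: a target survives only if the source label
# value matches the regex in full. keep_target is a hypothetical helper.
keep_target() {
  # $1 = value of __meta_kubernetes_service_label_component, $2 = regex
  printf '%s\n' "$1" | grep -E -x -q -- "$2"
}

for component in apiserver "" kube-dns; do
  if keep_target "$component" 'apiserver'; then
    echo "keep: '$component'"
  else
    echo "drop: '$component'"
  fi
done
```

Only the first value is kept; targets whose Service lacks the label, or carries a different value, are dropped before any scrape happens.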

Update the configuration file:

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: prom
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      scrape_timeout: 15s
    scrape_configs:
    - job_name: 'prometheus'
      static_configs:
      - targets: ['localhost:9090']
    - job_name: 'coredns'
      static_configs:
      - targets: ['10.2.0.16:9153','10.2.0.17:9153']
    - job_name: 'mysql'
      static_configs:
      - targets: ['mysql-svc:9104']
    - job_name: 'nodes'
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - source_labels: ['__address__']
        regex: '(.*):10250'
        replacement: '${1}:9100'
        target_label: __address__
        action: replace
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
    - job_name: 'kubelet'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        insecure_skip_verify: true
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
    - job_name: 'cadvisor'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        insecure_skip_verify: true
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
        replacement: $1
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.*)
        replacement: /metrics/cadvisor
        target_label: __metrics_path__
    - job_name: 'apiservers'
      kubernetes_sd_configs:
      - role: endpoints
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        insecure_skip_verify: true
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_label_component]
        action: keep
        regex: apiserver

Apply the change:

kubectl apply -f prom-cm.yml

curl -X POST "http://10.2.2.86:9090/-/reload"

Check the result:

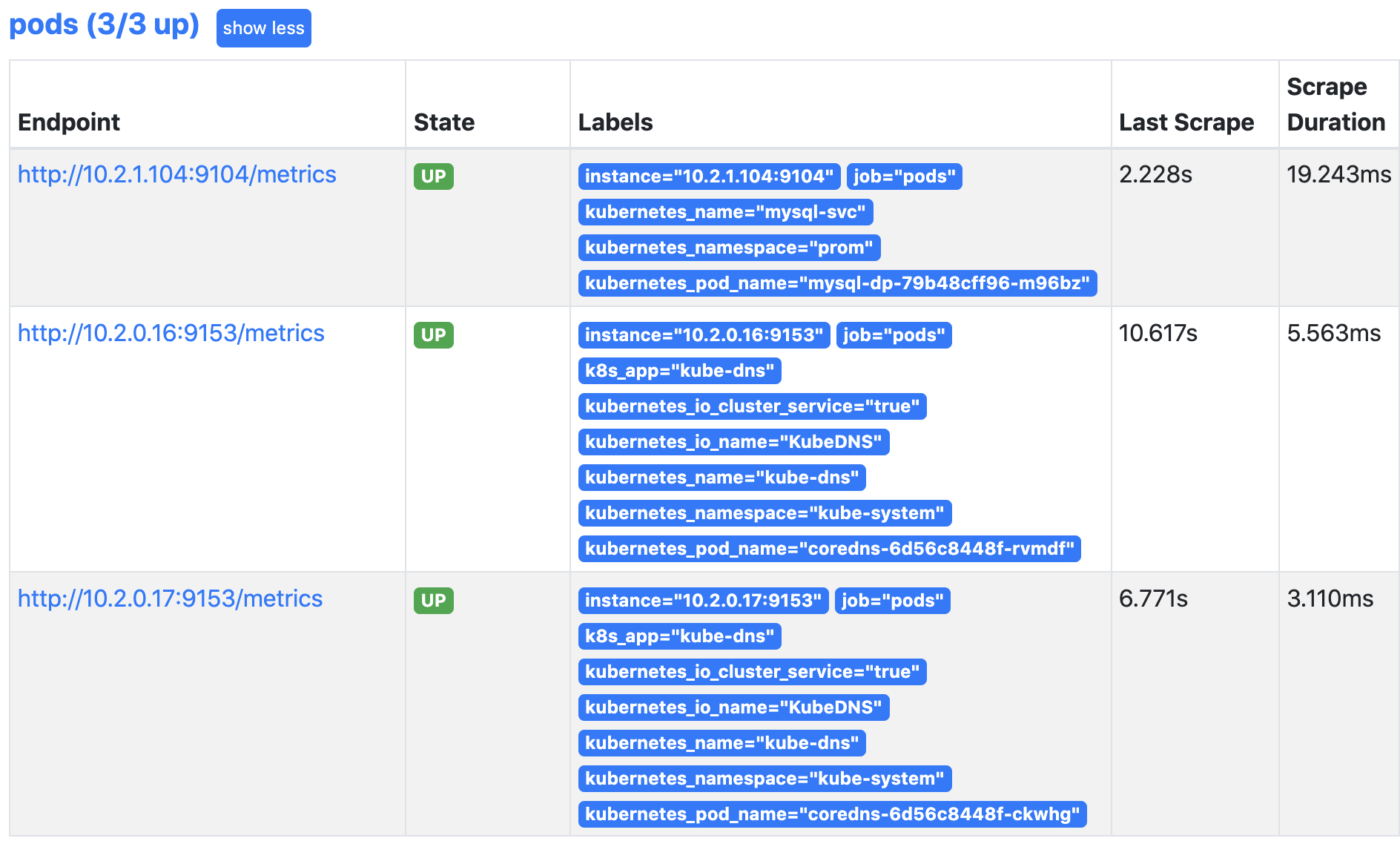

Pod auto-discovery

Pod metrics are also collected through Endpoints discovery here; we just need some extra matching rules.

The configuration is as follows:

- job_name: 'pods'
  kubernetes_sd_configs:
  - role: endpoints
  relabel_configs:
  - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
    action: keep
    regex: true
  - action: labelmap
    regex: __meta_kubernetes_service_label_(.+)
  - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
    action: replace
    target_label: __address__
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_service_name]
    action: replace
    target_label: kubernetes_name
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name

Apply the configuration:

kubectl apply -f prom-cm.yml

curl -X POST "http://10.2.2.86:9090/-/reload"

Check the result:

What the matching rules do:

Keep only targets whose __meta_kubernetes_service_annotation_prometheus_io_scrape is true.

Replace the port in __address__ with the port given in __meta_kubernetes_service_annotation_prometheus_io_port.
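The address rewrite is the least obvious of these rules, so here is an offline approximation. Prometheus joins the values of the source labels with ';' before matching. Because sed does not understand the RE2 syntax (?:...) or \d, the pattern ([^:]+)(?::\d+)?;(\d+) is translated to POSIX ERE below, which shifts the port group from $2 to \3. rewrite_address is a hypothetical helper and the addresses are sample values.

```shell
#!/bin/sh
# Approximate the __address__ rewrite of the 'pods' job.
# rewrite_address is a hypothetical helper, not part of Prometheus.
rewrite_address() {
  # $1 = __address__, $2 = value of the prometheus.io/port annotation
  printf '%s;%s\n' "$1" "$2" \
    | sed -E 's/^([^:]+)(:[0-9]+)?;([0-9]+)$/\1:\3/'
}

rewrite_address '10.2.1.61:53' '9153'   # existing port is replaced
rewrite_address '10.2.1.61' '9104'      # port is appended when none was present
```

In both cases the host part is kept and the port from the annotation wins, which is why a Service only needs to state the metrics port in its annotation.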

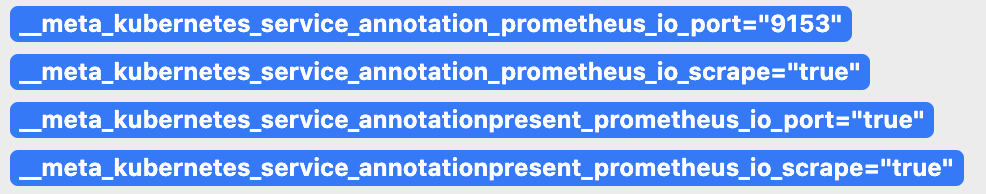

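How the discovery works: when the Service was created, it was annotated with the prometheus.io scrape flag and the metrics port, which is what lets Prometheus discover it automatically.

[root@node1 prom]# kubectl -n kube-system get svc kube-dns -o yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: KubeDNS

The corresponding data in Prometheus service discovery:

This means that any application we deploy from now on only needs these two annotations to be discovered automatically. Let's update the mysql Service we created earlier and add the annotations so that it is picked up as well.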

---
kind: Service
apiVersion: v1
metadata:
  name: mysql-svc
  namespace: prom
  annotations:
    prometheus.io/scrape: "true"
    prometheus.io/port: "9104"
spec:
  selector:
    app: mysql
  ports:
  - name: mysql
    port: 3306
    targetPort: 3306
  - name: mysql-prom
    port: 9104
    targetPort: 9104

In Prometheus you can see that the mysql pod has been discovered automatically.

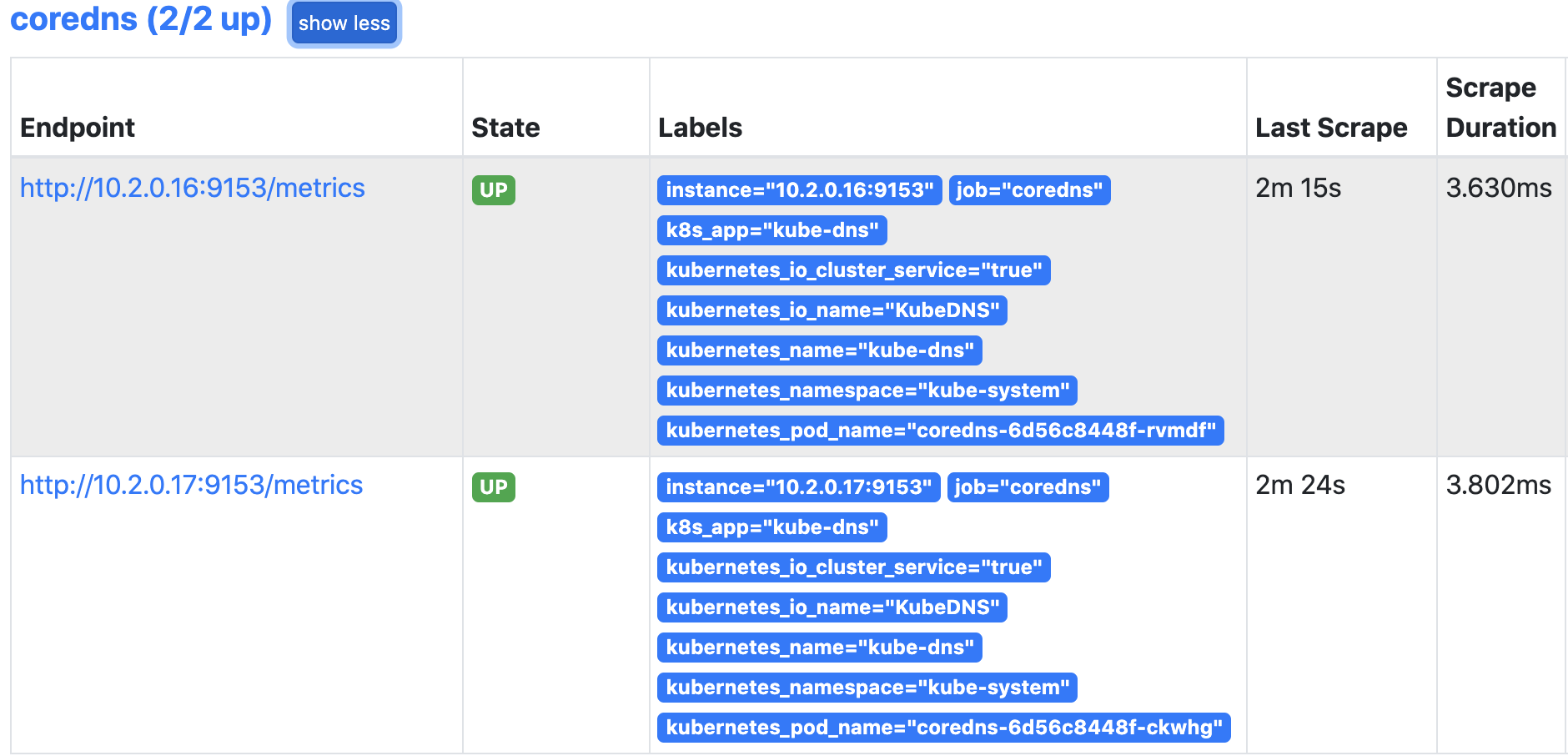

We can now remove the static mysql job configured earlier. Likewise, the static coredns job can be removed and handled by auto-discovery as well. The configuration is as follows:

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: prom
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      scrape_timeout: 15s
    scrape_configs:
    - job_name: 'prometheus'
      static_configs:
      - targets: ['localhost:9090']
    #- job_name: 'coredns'
    #  static_configs:
    #  - targets: ['10.2.0.16:9153','10.2.0.17:9153']
    #- job_name: 'mysql'
    #  static_configs:
    #  - targets: ['mysql-svc:9104']
    - job_name: 'nodes'
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - source_labels: ['__address__']
        regex: '(.*):10250'
        replacement: '${1}:9100'
        target_label: __address__
        action: replace
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
    - job_name: 'kubelet'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        insecure_skip_verify: true
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
    - job_name: 'cadvisor'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        insecure_skip_verify: true
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
        replacement: $1
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.*)
        replacement: /metrics/cadvisor
        target_label: __metrics_path__
    - job_name: 'apiservers'
      kubernetes_sd_configs:
      - role: endpoints
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        insecure_skip_verify: true
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_label_component]
        action: keep
        regex: apiserver
    - job_name: 'pods'
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
        action: replace
        target_label: __address__
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: kubernetes_name
      - source_labels: [__meta_kubernetes_pod_name]
        action: replace
        target_label: kubernetes_pod_name
    - job_name: 'coredns'
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
        action: replace
        target_label: __address__
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: kubernetes_name
      - source_labels: [__meta_kubernetes_pod_name]
        action: replace
        target_label: kubernetes_pod_name
      - source_labels: [__meta_kubernetes_endpoints_name]
        action: keep
        regex: kube-dns

Apply the configuration:

kubectl apply -f prom-cm.yml

curl -X POST "http://10.2.2.86:9090/-/reload"

Check the result:

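Monitoring k8s resource objects

Introduction

The auto-discovery above uses endpoints and collects application metrics. But the resource objects inside k8s, such as pods, deployments, and daemonsets, also need monitoring: for example, how many pods currently exist and what state they are in.

These metrics come from another exporter, kube-state-metrics.

Project URL:

https://github.com/kubernetes/kube-state-metrics

Installation

Clone the repository:

git clone https://github.com/kubernetes/kube-state-metrics.git

Edit the manifests:

Here we mainly change the image and add the auto-discovery annotations.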

cd kube-state-metrics/examples/standard

vim deployment.yaml
-------------------
- image: bitnami/kube-state-metrics:2.1.1
-------------------

vim service.yaml
-------------------
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/name: kube-state-metrics
    app.kubernetes.io/version: 2.1.1
  annotations:
    prometheus.io/scrape: "true"
    prometheus.io/port: "8080"
  name: kube-state-metrics
  namespace: kube-system
......................
-------------------

Create the resources:

[root@node1 standard]# kubectl apply -f ./
clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created
clusterrole.rbac.authorization.k8s.io/kube-state-metrics created
deployment.apps/kube-state-metrics created
serviceaccount/kube-state-metrics created
service/kube-state-metrics created

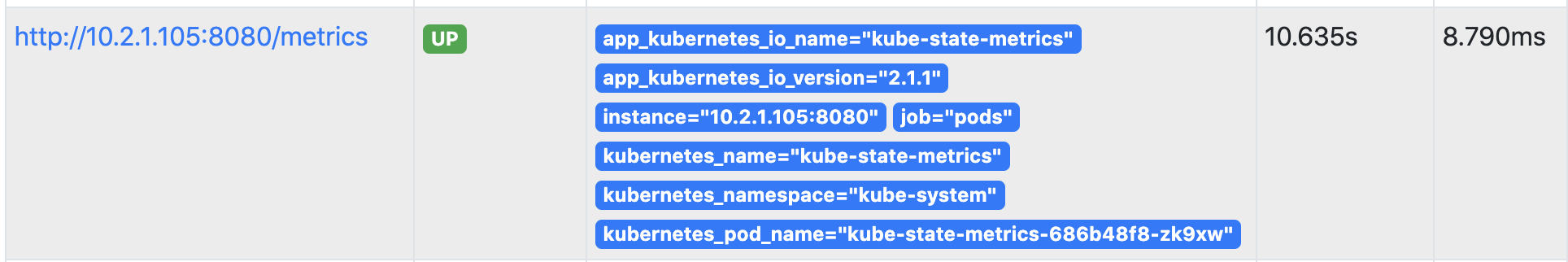

View in Prometheus:

Example use cases:

Jobs that have failed: kube_job_status_failed > 0

Nodes that are not Ready: kube_node_status_condition{condition="Ready", status!="true"}==1

Pods that failed to start: kube_pod_status_phase{phase=~"Failed|Unknown"}==1

Pod containers restarted in the last 30 minutes: changes(kube_pod_container_status_restarts_total[30m])>0

For more usage, see the official documentation:

https://github.com/kubernetes/kube-state-metrics

Updated: 2024-09-21 16:11:33