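Chapter 4: MongoDB Sharded Clusters

Sharding Overview

Why Sharding Is Needed

Why shard?

- If a replica set is already in place, why add sharding?

- A replica set uses resources inefficiently: every data-bearing member stores a full copy of the data.

- The primary carries heavy read/write load: all writes, and by default all reads, go to it.

Sharding pros and cons: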

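| Type | Details |
|---|---|
| Pros | High resource utilization; read/write load is balanced across shards; horizontal scale-out |
| Cons | Ideally needs many machines; configuration and operations become far more complex; the architecture must be planned up front, because it is hard to change once the cluster is built |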

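Core Sharding Concepts

Core Components

1. Router: mongos

- Does not need a replica set; each mongos is independent, and all of them use the same configuration

- mongos has no data directory and stores no data

- Acts as a proxy: it forwards client requests to the shards that hold the data

2. Config servers: config

- Since MongoDB 3.4, config servers must be deployed as a replica set

- Record which shard each range of data lives on

- Store the configuration of every shard

- Answer metadata queries from mongos

3. Shard key

- The rule that decides which shard a document is placed on

- The shard key must be backed by an index (for a non-empty collection, the index has to exist before the collection is sharded)

4. Data nodes: shard

- Nodes that store and serve the data; each shard holds one portion of the cluster's data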

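Shard Key Types and Characteristics

Hashed Shard Key

Sample data:

| id | name | host | sex |
|---|---|---|---|
| 1 | zhang | SH | boy |
| 2 | ya | BJ | boy |
| 3 | yaya | SZ | girl |

Distribution with id as the shard key:

Index: id

1 -> hash -> shard1

2 -> hash -> shard2

3 -> hash -> shard3

Characteristics of a hashed shard key:

- Placement is effectively random, so data spreads evenly across the shards; the trade-off is that range queries on the key have to touch every shard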
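The even spread of a hashed key can be sketched in a few lines. This is only an illustration: the FNV-1a hash and the modulo step are assumptions chosen to keep the example short. MongoDB itself uses its own hash function and splits the hash space into chunks rather than taking a modulo.

```javascript
// Illustrative only: FNV-1a and the modulo step are stand-ins, not what
// MongoDB does internally for hashed shard keys.
function fnv1a(text) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0; // keep it an unsigned 32-bit value
  }
  return hash;
}

// Map an id to one of the shard names by hashing it.
function pickShardByHash(id, shardNames) {
  return shardNames[fnv1a(String(id)) % shardNames.length];
}

// Count how many ids land on each shard.
function countPerShard(ids, shardNames) {
  const counts = {};
  for (const name of shardNames) counts[name] = 0;
  for (const id of ids) counts[pickShardByHash(id, shardNames)] += 1;
  return counts;
}

const shards = ["shard1", "shard2", "shard3"];
const ids = Array.from({ length: 9999 }, (_, i) => i + 1);
console.log(countPerShard(ids, shards));
```

Consecutive ids end up on unrelated shards, which is why a hashed key avoids the hot spot that a monotonically increasing key would create.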

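Range Sharding

Sample data:

| id | name | host | sex |
|---|---|---|---|
| 1 | zhang | SH | boy |
| 2 | ya | BJ | boy |
| 3 | yaya | SZ | girl |

With id as the shard key:

| id range | shard |
|---|---|
| 1-100 | shard1 |
| 101-200 | shard2 |
| 201-300 | shard3 |
| 301 and above | shard4 |

With host as the shard key:

| host | shard |
|---|---|
| SH | shard1 |
| BJ | shard2 |
| SZ | shard3 |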
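Range-based placement amounts to a table lookup. The sketch below mirrors the id ranges from the example; the `idRanges` table and the `pickShardByRange` helper are hypothetical names used only for illustration.

```javascript
// Hypothetical range table mirroring the id example above.
const idRanges = [
  { min: 1, max: 100, shard: "shard1" },
  { min: 101, max: 200, shard: "shard2" },
  { min: 201, max: 300, shard: "shard3" },
  { min: 301, max: Infinity, shard: "shard4" },
];

// Return the shard whose range contains the id, or null if none does.
function pickShardByRange(id, ranges) {
  const hit = ranges.find((r) => id >= r.min && id <= r.max);
  return hit ? hit.shard : null;
}

console.log(pickShardByRange(2, idRanges));   // shard1
console.log(pickShardByRange(150, idRanges)); // shard2
console.log(pickShardByRange(5000, idRanges)); // shard4
```

Neighbouring ids stay together, which makes range queries cheap, but an id that only ever grows sends every new document to the last range.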

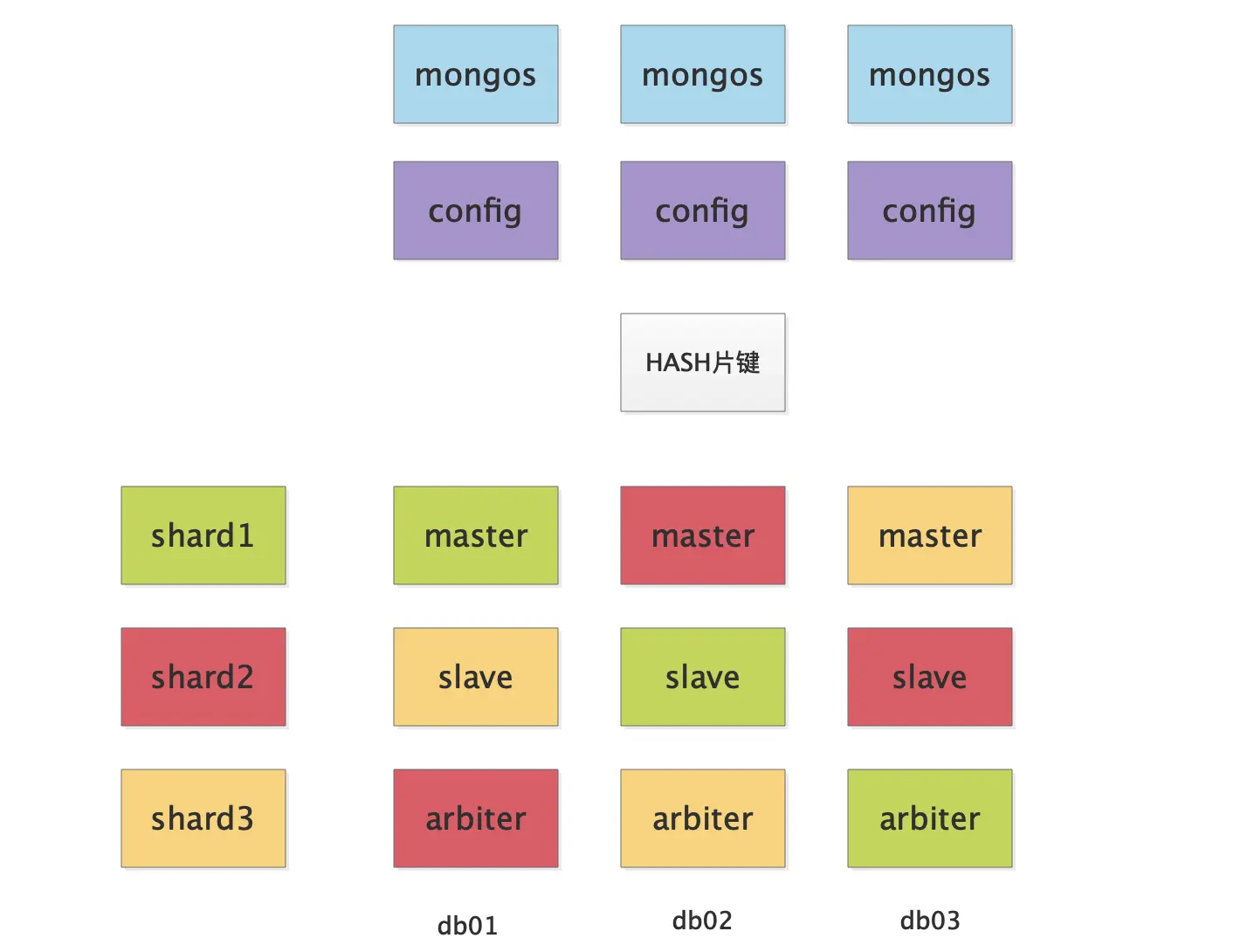

集群架构设计

分片架构图

IP端口规划

| Port | db-51 (10.0.0.51) | db-52 (10.0.0.52) | db-53 (10.0.0.53) |
|---|---|---|---|
| 28100 | Shard1_Master | Shard2_Master | Shard3_Master |
| 28200 | Shard3_Slave | Shard1_Slave | Shard2_Slave |
| 28300 | Shard2_Arbiter | Shard3_Arbiter | Shard1_Arbiter |
| 40000 | Config Server | Config Server | Config Server |
| 60000 | mongos Server | mongos Server | mongos Server |
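The plan follows a simple rotation: each shard's master, slave and arbiter sit on three different machines. A minimal sketch of that rule follows, using the hosts and ports from the plan. The `shardLayout` helper is a hypothetical name, and the rotation is an observation about this plan rather than a MongoDB requirement.

```javascript
// Hosts and ports are taken from the plan above.
const hosts = ["10.0.0.51", "10.0.0.52", "10.0.0.53"];
const ports = { master: 28100, slave: 28200, arbiter: 28300 };

// Shard N's master sits on host N, its slave on the next host,
// and its arbiter on the host after that (wrapping around).
function shardLayout(hostList, portMap) {
  return hostList.map((_, i) => {
    const at = (offset) => hostList[(i + offset) % hostList.length];
    return {
      name: `shard${i + 1}`,
      master: `${at(0)}:${portMap.master}`,
      slave: `${at(1)}:${portMap.slave}`,
      arbiter: `${at(2)}:${portMap.arbiter}`,
    };
  });
}

const layout = shardLayout(hosts, ports);
console.log(layout);
```

Because no machine hosts two members of the same shard, losing one machine leaves every shard with two of its three members.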

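Directory Plan

Service directories:

/opt/master/{conf,log,pid}

/opt/slave/{conf,log,pid}

/opt/arbiter/{conf,log,pid}

/opt/config/{conf,log,pid}

/opt/mongos/{conf,log,pid}

Data directories (mongos stores no data, so it has none):

/data/master

/data/slave

/data/arbiter

/data/config

Deployment Steps at a Glance

1. Deploy the shard replica sets

2. Deploy the config replica set

3. Deploy mongos

4. Add the shards to the cluster

5. Enable sharding on the database

6. Set the shard key on the collection

7. Insert test data

8. Verify the sharding result

9. Install and use a graphical tool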

Deploying the Shard Replica Sets

Software Installation

On db-51:

pkill mongo

rm -rf /opt/mongo_2*

rm -rf /data/mongo_2*

rsync -avz /opt/mongodb* 10.0.0.52:/opt/

rsync -avz /opt/mongodb* 10.0.0.53:/opt/

On db-52 and db-53:

echo 'export PATH=$PATH:/opt/mongodb/bin' >> /etc/profile

source /etc/profile

Create the directories (on all three machines)

mkdir -p /opt/master/{conf,log,pid}

mkdir -p /opt/slave/{conf,log,pid}

mkdir -p /opt/arbiter/{conf,log,pid}

mkdir -p /data/master

mkdir -p /data/slave

mkdir -p /data/arbiter

Configuration Files on db-51

Master node configuration file:

cat >/opt/master/conf/mongod.conf<<EOF
systemLog:
  destination: file
  logAppend: true
  path: /opt/master/log/mongodb.log
storage:
  journal:
    enabled: true
  dbPath: /data/master/
  directoryPerDB: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 0.5
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
processManagement:
  fork: true
  pidFilePath: /opt/master/pid/mongodb.pid
  timeZoneInfo: /usr/share/zoneinfo
net:
  port: 28100
  bindIp: 127.0.0.1,$(ifconfig eth0|awk 'NR==2{print $2}')
replication:
  oplogSizeMB: 1024
  replSetName: shard1
sharding:
  clusterRole: shardsvr
EOF

Slave node configuration file:

cat >/opt/slave/conf/mongod.conf<<EOF
systemLog:
  destination: file
  logAppend: true
  path: /opt/slave/log/mongodb.log
storage:
  journal:
    enabled: true
  dbPath: /data/slave/
  directoryPerDB: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 0.5
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
processManagement:
  fork: true
  pidFilePath: /opt/slave/pid/mongodb.pid
  timeZoneInfo: /usr/share/zoneinfo
net:
  port: 28200
  bindIp: 127.0.0.1,$(ifconfig eth0|awk 'NR==2{print $2}')
replication:
  oplogSizeMB: 1024
  replSetName: shard3
sharding:
  clusterRole: shardsvr
EOF

Arbiter node configuration file:

cat >/opt/arbiter/conf/mongod.conf<<EOF
systemLog:
  destination: file
  logAppend: true
  path: /opt/arbiter/log/mongodb.log
storage:
  journal:
    enabled: true
  dbPath: /data/arbiter/
  directoryPerDB: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 0.5
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
processManagement:
  fork: true
  pidFilePath: /opt/arbiter/pid/mongodb.pid
  timeZoneInfo: /usr/share/zoneinfo
net:
  port: 28300
  bindIp: 127.0.0.1,$(ifconfig eth0|awk 'NR==2{print $2}')
replication:
  oplogSizeMB: 1024
  replSetName: shard2
sharding:
  clusterRole: shardsvr
EOF

Configuration Files on db-52

Master node configuration file:

cat >/opt/master/conf/mongod.conf<<EOF
systemLog:
  destination: file
  logAppend: true
  path: /opt/master/log/mongodb.log
storage:
  journal:
    enabled: true
  dbPath: /data/master/
  directoryPerDB: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 0.5
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
processManagement:
  fork: true
  pidFilePath: /opt/master/pid/mongodb.pid
  timeZoneInfo: /usr/share/zoneinfo
net:
  port: 28100
  bindIp: 127.0.0.1,$(ifconfig eth0|awk 'NR==2{print $2}')
replication:
  oplogSizeMB: 1024
  replSetName: shard2
sharding:
  clusterRole: shardsvr
EOF

Slave node configuration file:

cat >/opt/slave/conf/mongod.conf<<EOF
systemLog:
  destination: file
  logAppend: true
  path: /opt/slave/log/mongodb.log
storage:
  journal:
    enabled: true
  dbPath: /data/slave/
  directoryPerDB: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 0.5
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
processManagement:
  fork: true
  pidFilePath: /opt/slave/pid/mongodb.pid
  timeZoneInfo: /usr/share/zoneinfo
net:
  port: 28200
  bindIp: 127.0.0.1,$(ifconfig eth0|awk 'NR==2{print $2}')
replication:
  oplogSizeMB: 1024
  replSetName: shard1
sharding:
  clusterRole: shardsvr
EOF

Arbiter node configuration file:

cat >/opt/arbiter/conf/mongod.conf<<EOF
systemLog:
  destination: file
  logAppend: true
  path: /opt/arbiter/log/mongodb.log
storage:
  journal:
    enabled: true
  dbPath: /data/arbiter/
  directoryPerDB: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 0.5
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
processManagement:
  fork: true
  pidFilePath: /opt/arbiter/pid/mongodb.pid
  timeZoneInfo: /usr/share/zoneinfo
net:
  port: 28300
  bindIp: 127.0.0.1,$(ifconfig eth0|awk 'NR==2{print $2}')
replication:
  oplogSizeMB: 1024
  replSetName: shard3
sharding:
  clusterRole: shardsvr
EOF

Configuration Files on db-53

Master node configuration file:

cat >/opt/master/conf/mongod.conf<<EOF
systemLog:
  destination: file
  logAppend: true
  path: /opt/master/log/mongodb.log
storage:
  journal:
    enabled: true
  dbPath: /data/master/
  directoryPerDB: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 0.5
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
processManagement:
  fork: true
  pidFilePath: /opt/master/pid/mongodb.pid
  timeZoneInfo: /usr/share/zoneinfo
net:
  port: 28100
  bindIp: 127.0.0.1,$(ifconfig eth0|awk 'NR==2{print $2}')
replication:
  oplogSizeMB: 1024
  replSetName: shard3
sharding:
  clusterRole: shardsvr
EOF

Slave node configuration file:

cat >/opt/slave/conf/mongod.conf<<EOF
systemLog:
  destination: file
  logAppend: true
  path: /opt/slave/log/mongodb.log
storage:
  journal:
    enabled: true
  dbPath: /data/slave/
  directoryPerDB: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 0.5
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
processManagement:
  fork: true
  pidFilePath: /opt/slave/pid/mongodb.pid
  timeZoneInfo: /usr/share/zoneinfo
net:
  port: 28200
  bindIp: 127.0.0.1,$(ifconfig eth0|awk 'NR==2{print $2}')
replication:
  oplogSizeMB: 1024
  replSetName: shard2
sharding:
  clusterRole: shardsvr
EOF

Arbiter node configuration file:

cat >/opt/arbiter/conf/mongod.conf<<EOF
systemLog:
  destination: file
  logAppend: true
  path: /opt/arbiter/log/mongodb.log
storage:
  journal:
    enabled: true
  dbPath: /data/arbiter/
  directoryPerDB: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 0.5
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
processManagement:
  fork: true
  pidFilePath: /opt/arbiter/pid/mongodb.pid
  timeZoneInfo: /usr/share/zoneinfo
net:
  port: 28300
  bindIp: 127.0.0.1,$(ifconfig eth0|awk 'NR==2{print $2}')
replication:
  oplogSizeMB: 1024
  replSetName: shard1
sharding:
  clusterRole: shardsvr
EOF
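The nine shard configuration files differ essentially in three places: the instance directory (master, slave or arbiter), the port, and the replica set name. The sketch below makes that explicit by rendering the file text from those parameters. `renderShardConf` is a hypothetical helper, and the bind address is passed in as a parameter instead of being read from `ifconfig` as in the commands above.

```javascript
// Hypothetical helper: renders the mongod.conf text used in this section.
// Only role, port, replSetName and bindIp vary between instances.
function renderShardConf({ role, port, replSetName, bindIp }) {
  return [
    "systemLog:",
    "  destination: file",
    "  logAppend: true",
    `  path: /opt/${role}/log/mongodb.log`,
    "storage:",
    "  journal:",
    "    enabled: true",
    `  dbPath: /data/${role}/`,
    "  directoryPerDB: true",
    "  wiredTiger:",
    "    engineConfig:",
    "      cacheSizeGB: 0.5",
    "      directoryForIndexes: true",
    "    collectionConfig:",
    "      blockCompressor: zlib",
    "    indexConfig:",
    "      prefixCompression: true",
    "processManagement:",
    "  fork: true",
    `  pidFilePath: /opt/${role}/pid/mongodb.pid`,
    "  timeZoneInfo: /usr/share/zoneinfo",
    "net:",
    `  port: ${port}`,
    `  bindIp: 127.0.0.1,${bindIp}`,
    "replication:",
    "  oplogSizeMB: 1024",
    `  replSetName: ${replSetName}`,
    "sharding:",
    "  clusterRole: shardsvr",
    "",
  ].join("\n");
}

// The three instances on db-51, as listed in the port plan.
const db51 = [
  { role: "master", port: 28100, replSetName: "shard1" },
  { role: "slave", port: 28200, replSetName: "shard3" },
  { role: "arbiter", port: 28300, replSetName: "shard2" },
].map((inst) => renderShardConf({ ...inst, bindIp: "10.0.0.51" }));

console.log(db51[0]);
```

Generating the files from one template is a way to avoid copy-paste mistakes such as a wrong `replSetName`, which would keep a member from joining its replica set.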

System tuning (on all three machines): disable Transparent Huge Pages. The setting does not survive a reboot.

echo "never" > /sys/kernel/mm/transparent_hugepage/enabled

echo "never" > /sys/kernel/mm/transparent_hugepage/defrag

Start the services (on all three machines)

mongod -f /opt/master/conf/mongod.conf

mongod -f /opt/slave/conf/mongod.conf

mongod -f /opt/arbiter/conf/mongod.conf

ps -ef|grep mongod

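Replica Set Initialization

On db-51, initialize shard1 from the master node:

mongo --port 28100

rs.initiate()

// Wait a moment until the prompt changes to PRIMARY

rs.add("10.0.0.52:28200")

rs.addArb("10.0.0.53:28300")

rs.status()

On db-52, initialize shard2 from the master node:

mongo --port 28100

rs.initiate()

// Wait a moment until the prompt changes to PRIMARY

rs.add("10.0.0.53:28200")

rs.addArb("10.0.0.51:28300")

rs.status()

On db-53, initialize shard3 from the master node:

mongo --port 28100

rs.initiate()

// Wait a moment until the prompt changes to PRIMARY

rs.add("10.0.0.51:28200")

rs.addArb("10.0.0.52:28300")

rs.status()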
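Called without arguments, `rs.initiate()` typically registers the first member under the machine's hostname, so every machine must be able to resolve that name. An alternative is to pass an explicit configuration document that lists all three members by IP. The sketch below builds such a document for shard1. `replSetConfig` is a hypothetical helper, and the hosts are the ones from this chapter's port plan.

```javascript
// Builds the document accepted by rs.initiate(); the arbiter is marked
// with arbiterOnly so it votes but holds no data.
function replSetConfig(name, master, slave, arbiter) {
  return {
    _id: name,
    members: [
      { _id: 0, host: master },
      { _id: 1, host: slave },
      { _id: 2, host: arbiter, arbiterOnly: true },
    ],
  };
}

const shard1Config = replSetConfig(
  "shard1",
  "10.0.0.51:28100",
  "10.0.0.52:28200",
  "10.0.0.53:28300"
);
console.log(JSON.stringify(shard1Config, null, 2));

// In the mongo shell on db-51 (port 28100) this could be applied with:
//   rs.initiate(shard1Config)
```

With this form, either data-bearing member may win the first election, so the node called "master" in the plan is not guaranteed to become PRIMARY.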

Deploying the Config Replica Set

Create the directories (on all three machines)

mkdir -p /opt/config/{conf,log,pid}

mkdir -p /data/config/

Create the configuration file (on all three machines)

cat >/opt/config/conf/mongod.conf<<EOF
systemLog:
  destination: file
  logAppend: true
  path: /opt/config/log/mongodb.log
storage:
  journal:
    enabled: true
  dbPath: /data/config/
  directoryPerDB: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 0.5
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
processManagement:
  fork: true
  pidFilePath: /opt/config/pid/mongod.pid
  timeZoneInfo: /usr/share/zoneinfo
net:
  port: 40000
  bindIp: 127.0.0.1,$(ifconfig eth0|awk 'NR==2{print $2}')
replication:
  replSetName: configset
sharding:
  clusterRole: configsvr
EOF

Start the service (on all three machines)

mongod -f /opt/config/conf/mongod.conf

ps -ef|grep mongod

Initialize the replica set (on db-51 only)

mongo --port 40000

rs.initiate()

// Wait a moment until the prompt changes to PRIMARY

rs.add("10.0.0.52:40000")

rs.add("10.0.0.53:40000")

rs.status()

Mongos Router and Sharding Configuration

Create the directories (on all three machines)

mkdir -p /opt/mongos/{conf,log,pid}

Create the configuration file (on all three machines)

cat >/opt/mongos/conf/mongos.conf<<EOF
systemLog:
  destination: file
  logAppend: true
  path: /opt/mongos/log/mongos.log
processManagement:
  fork: true
  pidFilePath: /opt/mongos/pid/mongos.pid
  timeZoneInfo: /usr/share/zoneinfo
net:
  port: 60000
  bindIp: 127.0.0.1,$(ifconfig eth0|awk 'NR==2{print $2}')
sharding:
  configDB: configset/10.0.0.51:40000,10.0.0.52:40000,10.0.0.53:40000
EOF

Start the service (on all three machines)

mongos -f /opt/mongos/conf/mongos.conf

Log in to mongos (on db-51)

mongo --port 60000

Adding Shard Members

Log in to mongos and add the shards (on db-51):

mongo --port 60000

use admin

db.runCommand({addShard:'shard1/10.0.0.51:28100,10.0.0.52:28200,10.0.0.53:28300'})

db.runCommand({addShard:'shard2/10.0.0.52:28100,10.0.0.53:28200,10.0.0.51:28300'})

db.runCommand({addShard:'shard3/10.0.0.53:28100,10.0.0.51:28200,10.0.0.52:28300'})
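Each `addShard` argument has the form `<replSetName>/<host1>,<host2>,...`. As a small sketch, the three commands can be generated from a member table, which helps keep the replica set name and the host list in step. `shardMembers` and `addShardCommands` are hypothetical names, and the hosts are copied from the commands above.

```javascript
// Shard members as listed in the addShard commands above.
const shardMembers = {
  shard1: ["10.0.0.51:28100", "10.0.0.52:28200", "10.0.0.53:28300"],
  shard2: ["10.0.0.52:28100", "10.0.0.53:28200", "10.0.0.51:28300"],
  shard3: ["10.0.0.53:28100", "10.0.0.51:28200", "10.0.0.52:28300"],
};

// Build one { addShard: "<name>/<hosts>" } command document per shard.
function addShardCommands(members) {
  return Object.entries(members).map(([name, hostList]) => ({
    addShard: `${name}/${hostList.join(",")}`,
  }));
}

const commands = addShardCommands(shardMembers);
commands.forEach((cmd) => console.log(JSON.stringify(cmd)));

// In a mongo shell connected to mongos, the documents could be run with:
//   commands.forEach((cmd) => printjson(db.adminCommand(cmd)))
```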

List the shard members:

db.runCommand({ listShards : 1 })

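Hashed Sharding Configuration

Enable sharding on the database:

mongo --port 60000

use admin

db.runCommand( { enableSharding : "luffy" } )

Create the shard key index: on the hash collection in the luffy database, create a hashed index on the id field.

use luffy

db.hash.createIndex( { id: "hashed" } )

Shard the collection on the hashed key:

use admin

sh.shardCollection( "luffy.hash",{ id: "hashed" } )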

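Verifying with Test Data

Generate test data (9,999 documents, id 1 through 9999):

mongo --port 60000

use luffy

for(i=1;i<10000;i++){db.hash.insert({"id":i,"name":"BJ","age":18});}

Check the document count on each shard by connecting directly to its master node:

shard1 (on db-51):

mongo --port 28100

use luffy

db.hash.count()

3349

shard2 (on db-52):

mongo --port 28100

use luffy

db.hash.count()

3366

shard3 (on db-53):

mongo --port 28100

use luffy

db.hash.count()

3284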
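The three counts add up to the 9,999 inserted documents. As a quick check of how even the split is, the sketch below computes the largest deviation from a perfectly even distribution. `summarize` is a hypothetical helper, and the counts are the ones shown above.

```javascript
// Per-shard document counts reported by db.hash.count() above.
const counts = { shard1: 3349, shard2: 3366, shard3: 3284 };

function summarize(countsByShard) {
  const values = Object.values(countsByShard);
  const total = values.reduce((sum, n) => sum + n, 0);
  const ideal = total / values.length;
  // Largest relative deviation from a perfectly even split, in percent.
  const maxDeviationPct = Math.max(
    ...values.map((n) => (Math.abs(n - ideal) / ideal) * 100)
  );
  return { total, ideal, maxDeviationPct };
}

const summary = summarize(counts);
console.log(summary.total, summary.ideal, summary.maxDeviationPct.toFixed(2));
```

The worst shard is off by roughly 1.5% from the ideal 3,333 documents, which matches the expectation that a hashed key spreads data evenly.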

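Shard Management Commands

Show detailed sharding status (the two commands print the same report):

mongo --port 60000

db.printShardingStatus()

sh.status()

List all shard members:

mongo --port 60000

use admin

db.runCommand({ listShards : 1})

List the databases with sharding enabled:

mongo --port 60000

use config

db.databases.find({"partitioned": true })

Show the shard key of each sharded collection:

mongo --port 60000

use config

db.collections.find().pretty()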

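Cluster Startup Order

Recommended startup order:

1. All config servers

2. All shard members: master first, then arbiter, then slave

3. All mongos instances

mongos reads the cluster metadata from the config servers and routes requests to the shards, so it is started last, which matches MongoDB's documented restart procedure. Shut the cluster down in the reverse order.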

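Sharded Cluster Best Practices

Recommendations for production:

| Item | Recommendation | Notes |
|---|---|---|
| Number of machines | At least 9 | 3 per shard plus 3 config servers: 9 with two shards, 12 with three shards as in this chapter |
| Shard key choice | High cardinality, even distribution | Avoids concentrating hot data on one shard |
| Monitoring and alerting | Shard balance, performance metrics | Detects data skew early |
| Backup strategy | Full plus incremental backups | Protects the data |

Architecture reminder: a sharded cluster is complex to build. Plan the architecture in advance, because changing it after the cluster is built is difficult.

Updated: 2025-01-26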